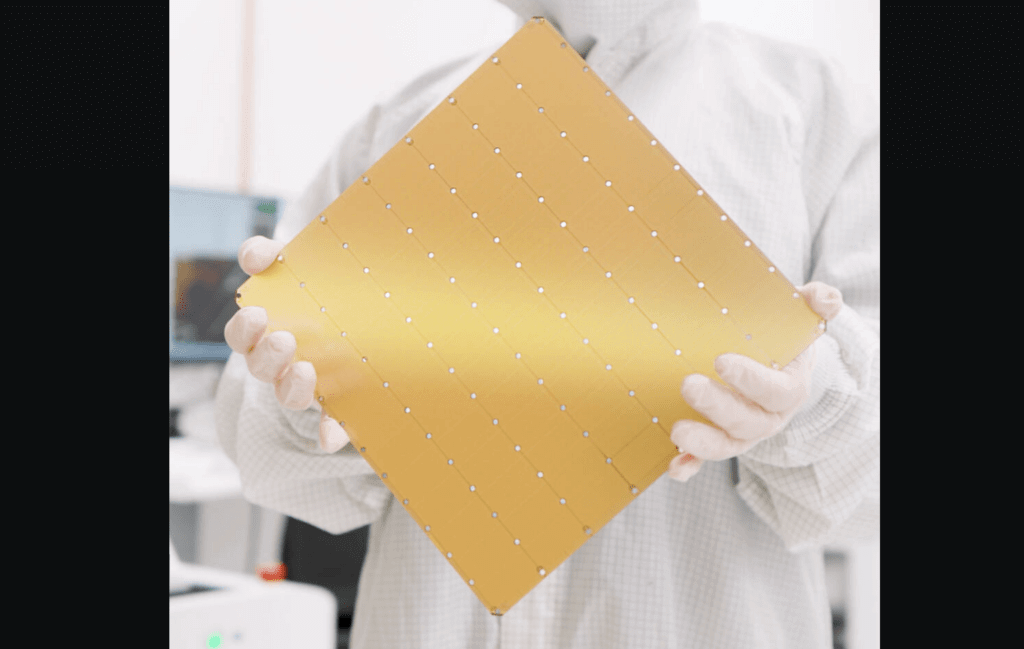

Cerebras Systems has introduced its Wafer Scale Engine 3 (WSE-3), a chip with 4 trillion transistors that delivers 125 petaflops of peak AI performance across 900,000 AI-optimized compute cores.

The WSE-3 powers the Cerebras CS-3 system. With an external memory system of up to 1.2 petabytes, the CS-3 is designed to train next-generation frontier models 10x larger than GPT-4 and Gemini. Models of up to 24 trillion parameters can be stored in a single logical memory space without partitioning or refactoring, which dramatically simplifies the training workflow and boosts developer productivity.

Key Specs:

- 4 trillion transistors

- 900,000 AI cores

- 125 petaflops of peak AI performance

- 44GB on-chip SRAM

- 5nm TSMC process

- External memory: 1.5TB, 12TB, or 1.2PB

- Trains AI models of up to 24 trillion parameters

- Cluster size of up to 2048 CS-3 systems
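Simple arithmetic can cross-check the headline numbers. The sketch below derives three figures from the specs listed above: the memory budget per parameter at the largest external-memory tier, the peak throughput per AI core, and the aggregate peak of a full 2048-system cluster. It assumes decimal units (1 PB = 10^15 bytes) and perfectly linear scaling across a cluster, and the announcement specifies neither. The constant and function names are hypothetical and chosen only for illustration.

```python
# Back-of-the-envelope figures derived from the published WSE-3 / CS-3 specs.
# Assumptions (not stated by Cerebras): decimal units, peak (not sustained)
# throughput, and perfectly linear scaling across a cluster.

PEAK_PFLOPS_PER_SYSTEM = 125                    # peak AI performance per CS-3
AI_CORES_PER_CHIP = 900_000                     # AI cores on one WSE-3
MAX_EXTERNAL_MEMORY_BYTES = 1_200_000_000_000_000  # 1.2 PB, decimal
MAX_PARAMETERS = 24_000_000_000_000             # 24 trillion parameters
MAX_CLUSTER_SYSTEMS = 2048                      # largest CS-3 cluster


def bytes_per_parameter(memory_bytes: int, parameters: int) -> float:
    """Memory available per parameter if the whole tier held one model."""
    return memory_bytes / parameters


def per_core_peak_gflops(pflops: float, cores: int) -> float:
    """Peak GFLOPS per core: 1 PFLOPS = 1e6 GFLOPS."""
    return pflops * 1e6 / cores


def cluster_peak_exaflops(systems: int, pflops_per_system: float) -> float:
    """Idealized cluster peak: 1 EFLOPS = 1000 PFLOPS, linear scaling assumed."""
    return systems * pflops_per_system / 1000


if __name__ == "__main__":
    print(f"{bytes_per_parameter(MAX_EXTERNAL_MEMORY_BYTES, MAX_PARAMETERS):.1f} bytes/parameter")
    print(f"{per_core_peak_gflops(PEAK_PFLOPS_PER_SYSTEM, AI_CORES_PER_CHIP):.1f} GFLOPS/core (peak)")
    print(f"{cluster_peak_exaflops(MAX_CLUSTER_SYSTEMS, PEAK_PFLOPS_PER_SYSTEM):.0f} exaflops (idealized cluster peak)")
```

Run as a script, this prints 50.0 bytes per parameter, about 138.9 GFLOPS per core, and 256 exaflops for a full cluster. Fifty bytes per parameter is comfortably above the roughly 16 bytes per parameter that a common mixed-precision Adam setup needs for weights, gradients and optimizer state. How Cerebras actually allocates that memory is not described in the announcement.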

“When we started on this journey eight years ago, everyone said wafer-scale processors were a pipe dream. We could not be more proud to be introducing the third generation of our groundbreaking wafer-scale AI chip,” said Andrew Feldman, CEO and co-founder of Cerebras. “WSE-3 is the fastest AI chip in the world, purpose-built for the latest cutting-edge AI work, from mixture of experts to 24 trillion parameter models. We are thrilled to bring WSE-3 and CS-3 to market to help solve today’s biggest AI challenges.”